AI experience? It’s like saying “electricity experience”: too vague

Creator of Aiverse

When you say “web experience” or “mobile experience,” you know exactly what you’re talking about. So when you talk about “designing for AI,” how does “conversational interfaces” sound? Too narrow? Too constrained, and missing the bigger play (I may be biased).

So then, what’s next?

“Agentive Experience”

Visionary yet specific. Perfecto. Defining, yet with room to experiment. It’s also where the tech is heading: towards an active, autonomous user experience.

🤖 In the era of Agentive UX

So I’ve heard all the hype about “agentic experience” — machines doing everything for you, the “next big thing” and all that jazz.

I bought it… until I spent a month with Christopher Noessel’s Designing Agentive Technology (2017). Here’s the real deal on designing AI agents:

Just for some credibility, who’s Christopher Noessel?

Veteran in UX design & tech

Design Principal at IBM, focusing on design for AI

Speculator, the sci-fi guy, creator of https://scifiinterfaces.com/

The guy you call when you want to know how machines should interact with humans

Note: I’ve pulled out the key points from the book that changed the way I look at “designing for AI.” The book has many more insights and examples, and I highly recommend reading it! I used the book as a learning resource and meant to share what I learned through excerpts; if the author or the publishers have a problem with any of the content in this article, please reach out.

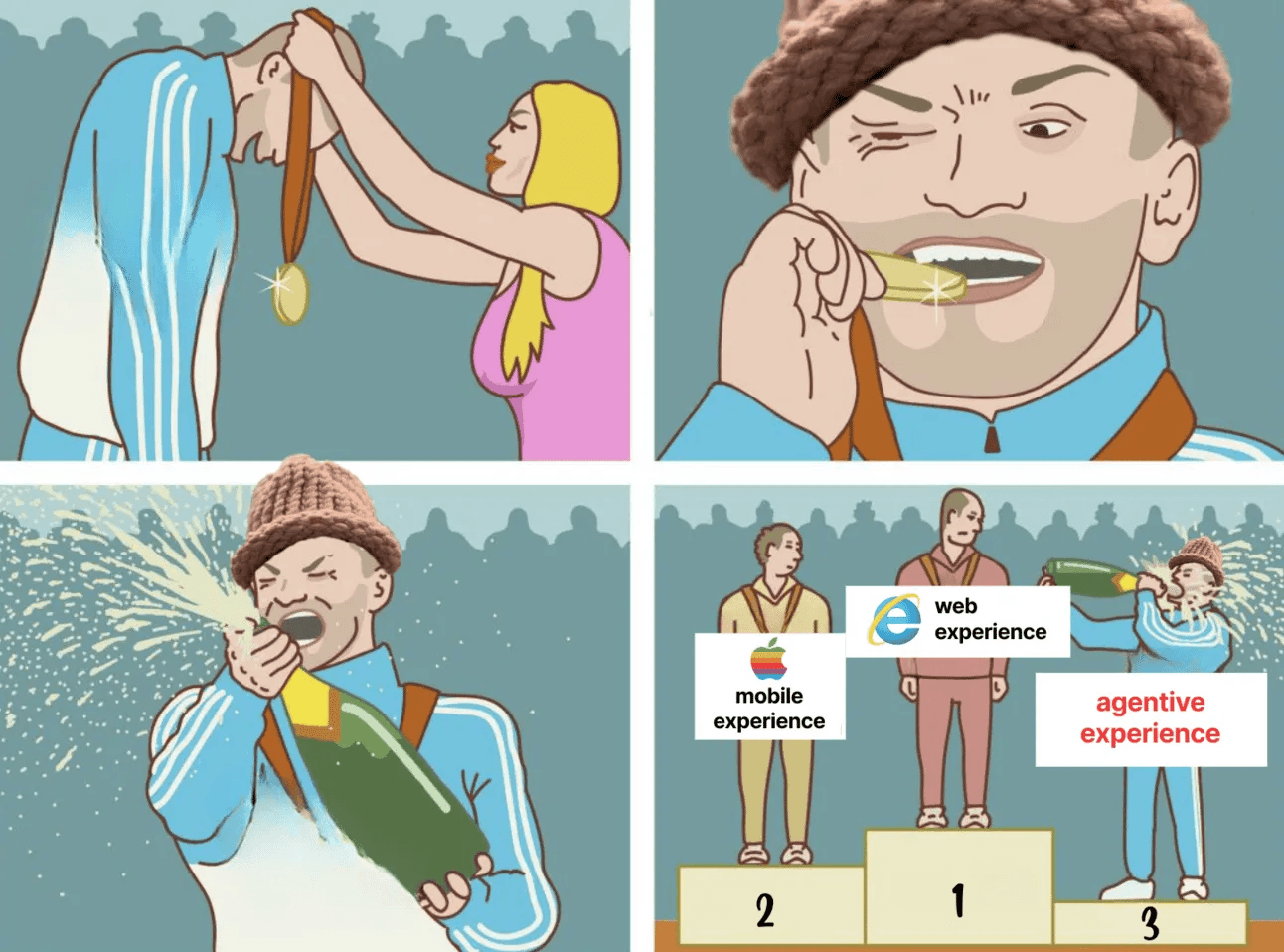

0/ Agentive vs Assistive vs Agentic

“Huh? They’re different?”

Yes, allow me to enlighten thee.

Agentic = a backstage thing; agents work on behalf of the primary AI. FYIFLN: “-ic” is Greek, “agent” is Latin.

Agentive = a front-stage thing; agents work on behalf of the user. FYIFLN: “-ive” is Latin, “agent” is Latin.

Assistive = helps you do a goal-based task faster or more efficiently. E.g., spell-check, autocorrect, and phone cameras’ point-and-click shooting without any complex settings.

*FYIFLN = For Your Information From the Language Nerds.

Source: Christopher Noessel’s blog

1/ Agents are persistent loops

We’re shifting from designing intuitive controls for the digital world, focused on “getting a task done quickly in that moment,” to having an agent look for things the user didn’t even know existed.

The agent’s aim isn’t so much to help you find stuff that’s already out there, but to catch what could show up in the future.

Your interests find you.

A.k.a. lil autopilots.

What would an agentive camera look like?

Instead of you taking point-and-click photos, a small device clipped to your T-shirt takes a photo every 30 seconds, and at the end of the day or month it (1) highlights the best ones and (2) clubs them into categories.

“You focused on living your life and having great photos, rather than taking photos”

— Example from the book

Kind of like Google Photos plus an always-on wearable camera.
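Here’s a minimal Python sketch of what that camera could do with a day’s worth of shots. It assumes every photo already arrives with a quality `score` and a `category` label; both are hypothetical (the book doesn’t say how they’d be computed), and `Photo`, `capture_schedule` and `end_of_day_digest` are made-up names for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Photo:
    taken_at: int   # seconds since the start of the day
    score: float    # hypothetical "how good is this shot" score, 0..1
    category: str   # hypothetical label, e.g. from an image classifier


def capture_schedule(day_seconds: int, interval: int = 30) -> list[int]:
    """Timestamps at which the wearable fires: one photo every `interval` seconds."""
    return list(range(0, day_seconds, interval))


def end_of_day_digest(photos: list[Photo], top_n: int = 3):
    """(1) Highlight the best shots and (2) club everything into categories."""
    highlights = sorted(photos, key=lambda p: p.score, reverse=True)[:top_n]
    albums: dict[str, list[Photo]] = defaultdict(list)
    for photo in photos:
        albums[photo.category].append(photo)
    return highlights, dict(albums)


# Usage: a (very short) day of automatic captures.
photos = [
    Photo(0, 0.2, "commute"),
    Photo(30, 0.9, "beach"),
    Photo(60, 0.7, "beach"),
    Photo(90, 0.95, "dinner"),
]
highlights, albums = end_of_day_digest(photos, top_n=2)
print([p.taken_at for p in highlights])          # [90, 30]
print({k: len(v) for k, v in albums.items()})    # {'commute': 1, 'beach': 2, 'dinner': 1}
```

The user never presses a shutter; the only “interaction” is reviewing the digest, which is the whole point of the agentive version.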

Note to self: Think in goals, not tasks.

Task: Take photos

Goal: Preserve happy vacation memories for a group of friends.

Example: Narrative Clip

Task: Map out the best route from source to destination

Goal: Reach the destination ASAP, adapting to real-time conditions.

Example: Waze

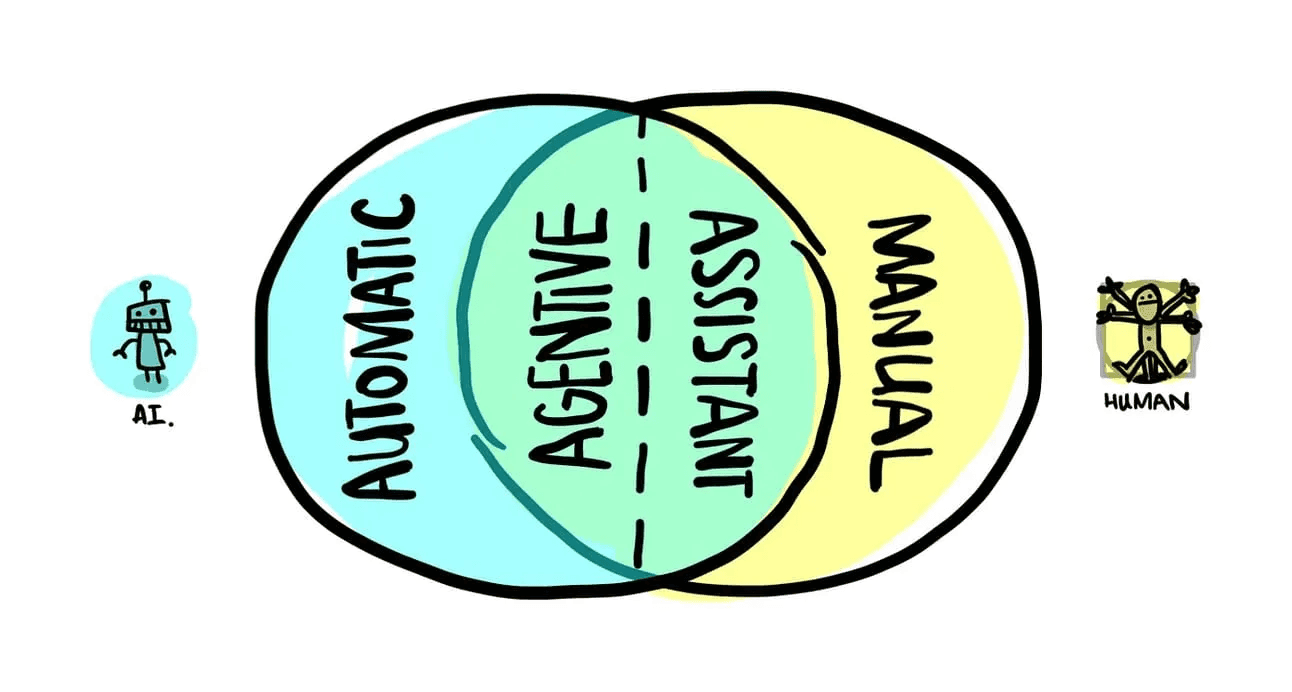

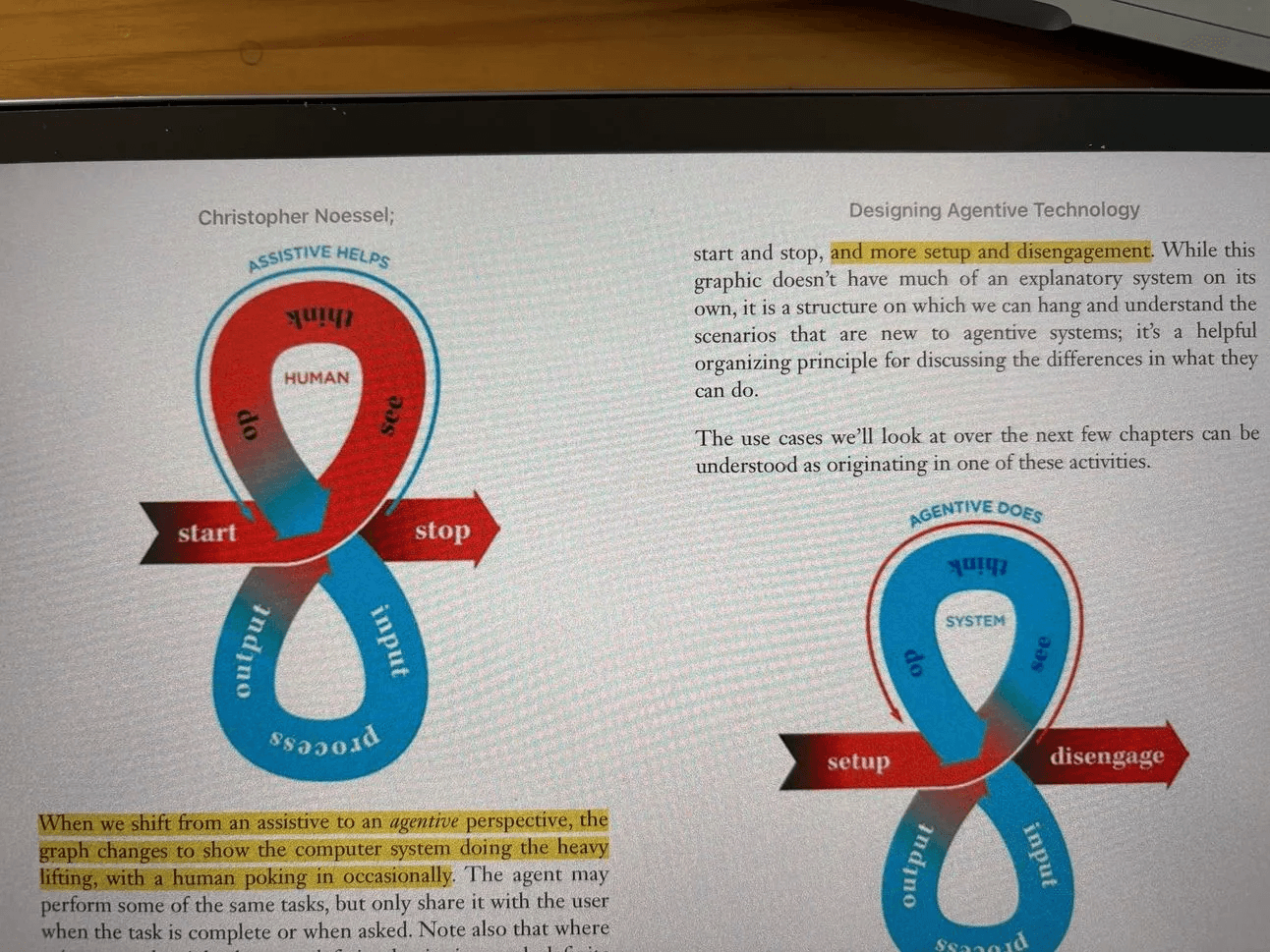

2/ Sense-Think-Do

Sense-think-do loop of assistive vs agentive tech (source: author)

Traditional human-machine interactions follow an input-process-output loop.

Agents follow a sense-think-do loop.

Sense how? Face recognition, biometrics, handwriting recognition, activity recognition, etc.

Think how? Algorithms that analyse the data and make predictions.

Do how? Via screens, messages, sound, haptics, robotics, APIs, etc.
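A rough Python sketch of the difference between the two loops. The function names are hypothetical, and a stubbed list of room temperatures stands in for real sensors; the “think” step is deliberately trivial. The point is the shape: the assistive tool does nothing until it’s handed input, while the agent keeps looping over whatever it senses.

```python
from typing import Callable, Iterable


def input_process_output(user_input: str, process: Callable[[str], str]) -> str:
    """Assistive tool: runs once, and only when the user provides input."""
    return process(user_input)


def sense_think_do(readings: Iterable[float], threshold: float) -> list[str]:
    """Agent: loops over a (stubbed, hypothetical) sensor stream with no user input.

    Each reading is a room temperature in °C.
    """
    actions = []
    for temp in readings:                                   # sense
        too_cold = temp < threshold                         # think (trivial "prediction")
        actions.append("heat on" if too_cold else "idle")   # do
    return actions


print(input_process_output("teh cat", lambda s: s.replace("teh", "the")))  # the cat
print(sense_think_do([17.5, 19.0, 21.0], threshold=19.5))  # ['heat on', 'heat on', 'idle']
```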

An important aspect of designing these agents is understanding the user’s goals and preferences. What is it that they want to accomplish? One way is to collect data implicitly (social media, private data, observing the user over time). Another way is to define them explicitly as rules.

Rules = Behaviours + Triggers + Exceptions

Rules: The logic by which agents operate

Behaviours: Know how an action is performed

Triggers: Know when it’s time for action

Exceptions: Know when not to operate

Triggers need not be dates or timestamps; they can be based on the temperature, keywords spoken, an email received, biometric data, or even the number of cat videos.

Fill my dog’s water bowl (rule/behaviour) when the temperature rises above 20°C (trigger), up to a maximum of two bowls per day (constraint), except when he gives you the sad puppy eyes (exception).
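Here’s the dog-bowl rule as a minimal Python sketch. The formula above has three parts, but the example also adds a constraint, so the sketch models four. `Rule`, its field names and the order of the checks (trigger, then exception, then constraint) are my own assumptions, not something from the book.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    behaviour: Callable[[], str]          # how the action is performed
    trigger: Callable[[dict], bool]       # when it's time to act
    exception: Callable[[dict], bool]     # when NOT to act
    max_per_day: int                      # constraint
    runs_today: int = 0

    def evaluate(self, ctx: dict) -> Optional[str]:
        """Return the action taken for this sensed context, or None."""
        if not self.trigger(ctx):
            return None
        if self.exception(ctx):
            return None
        if self.runs_today >= self.max_per_day:
            return None
        self.runs_today += 1
        return self.behaviour()

    def start_new_day(self) -> None:
        """Reset the daily constraint (a real agent would call this at midnight)."""
        self.runs_today = 0


water_rule = Rule(
    behaviour=lambda: "fill water bowl",
    trigger=lambda ctx: ctx["temp_c"] > 20,
    exception=lambda ctx: ctx["sad_puppy_eyes"],
    max_per_day=2,
)

day = [
    {"temp_c": 18, "sad_puppy_eyes": False},  # no trigger
    {"temp_c": 24, "sad_puppy_eyes": False},  # fills (1 of 2)
    {"temp_c": 26, "sad_puppy_eyes": True},   # exception
    {"temp_c": 27, "sad_puppy_eyes": False},  # fills (2 of 2)
    {"temp_c": 29, "sad_puppy_eyes": False},  # constraint reached
]
results = [water_rule.evaluate(ctx) for ctx in day]
print(results)  # [None, 'fill water bowl', None, 'fill water bowl', None]
```

Keeping the four parts as separate fields makes each one something the user could see and edit on its own, rather than one opaque blob of logic.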

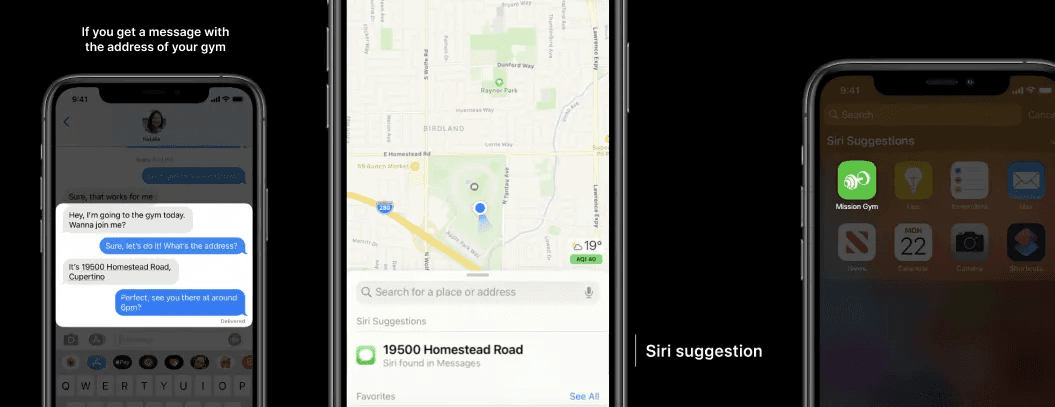

A prime example? Siri Suggestions. It’s from 2020, but on this journey of catching up on everything that’s happened in AI & design, it perfectly showcases agentive tech.

You get a message with the location of the gym you’re going to later in the day.

Maps suggests that location up front.

Around the time of your gym session, Siri suggests the relevant app in your swipe-down drawer.

How Siri Suggestions work (source: WWDC’20).

Absolutely obsessed. You have to watch the behind-the-scenes session. At the end of the day, the average user just wants smart defaults, not the option to customise or configure their software.

3/ Agency is Proactive Intelligence

Agents are like a great waiter: there when needed, invisible when not. Here are some places where agents can fit into your product’s usage cycle:

Preparation: Predict upcoming actions and get things ready for use. A Nest thermostat sets the temperature before you arrive.

Optimization: Observe all the possibilities and pick the right one for the user. Automatically switching between Wi-Fi, cellular data and Bluetooth based on where you are and what you’re trying to do.

Advising: Observe in-progress tasks and suggest better or alternative options. Waze suggesting a faster route because of an accident ahead.

Manipulation: Act on its own when it knows for sure what the user wants. Gmail adding events to your calendar based on an email.

Inhibition: Understand enough context to know what is welcome and suppress the rest. Your phone/laptop activating DND mode when you’re giving a presentation.

Finalization: End or close things once they are no longer in use. Spotify stopping playback when you take your headphones off.
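Inhibition is the easiest of these to sketch. A minimal Python version, assuming the agent has already sensed whether you’re presenting, and that you keep a hypothetical allowlist of senders who are always welcome. `Notification` and `inhibit` are illustrative names, not a real OS API.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    sender: str
    text: str


def inhibit(notifications: list[Notification], presenting: bool,
            always_allow: set[str]) -> list[Notification]:
    """Let everything through normally; while presenting, only let through
    senders the user has marked as always welcome (hypothetical allowlist)."""
    if not presenting:
        return notifications
    return [n for n in notifications if n.sender in always_allow]


inbox = [
    Notification("Mom", "Call me when you can"),
    Notification("Promo Bot", "50% off today!"),
    Notification("Team chat", "Lunch?"),
]
shown = inhibit(inbox, presenting=True, always_allow={"Mom"})
print([n.sender for n in shown])  # ['Mom']
```

The hard part in practice is the “understand enough context” bit (knowing you’re presenting and what counts as welcome); the suppression itself is simple.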

Success metric of an agent?

When the user says, “Oh wow, that’s exactly what I wanted!”

Surprise and delight, every time.

4/ The spectrum of Auto vs Manual

One of the best experiences of agentive tech I’ve had was driving a “smart” car.

Switching on “cruise control” & “lane driving.” Taking a sip of a chilled piña colada as I relax and let myself be driven into the sunset. Okay, so maybe the second half is made up, but the first half is *chef’s kiss*. I can leave the agent running, and as soon as I want to switch to manual, I can just take control and drive normally.

*smoooottthhh opperaatorrr..* (only F1 fans get it)

That’s what users want.

Auto mode: complete trust, “leaving it to the agent”

Can-I-play-with mode: partial trust, “would like to play with it while letting the agent do its task”

Manual mode: no trust, “let me handle this part”

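To switch smoothly between modes, there needs to be mutual observability, predictability and directability: each side can see what the other is doing, anticipate what it will do next and redirect it when needed.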
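A minimal Python sketch of those three modes for the cruise-control example, with hypothetical names throughout. `status()` covers observability and predictability (the agent always says which mode it’s in and what it will keep doing), and `on_driver_input()` covers directability (the driver can tweak or take over instantly). The specific inputs (`"brake"`, `"adjust_speed"`, `"resume"`) are assumptions for illustration.

```python
from enum import Enum


class Mode(Enum):
    AUTO = "auto"                         # complete trust: leave it to the agent
    CAN_I_PLAY_WITH = "can-I-play-with"   # partial trust: user tweaks, agent keeps going
    MANUAL = "manual"                     # no trust: "let me handle this part"


class CruiseAgent:
    def __init__(self, target_speed: int):
        self.mode = Mode.AUTO
        self.target_speed = target_speed  # km/h

    def status(self) -> str:
        """Observability + predictability: say what mode we're in and what's next."""
        if self.mode is Mode.MANUAL:
            return "manual: driver in control"
        return f"{self.mode.value}: holding {self.target_speed} km/h"

    def on_driver_input(self, kind: str, value: int = 0) -> None:
        """Directability: the driver can adjust or take over at any moment."""
        if kind == "brake":
            self.mode = Mode.MANUAL               # hand back full control immediately
        elif kind == "adjust_speed":
            self.target_speed += value            # tweak without switching the agent off
            if self.mode is Mode.AUTO:
                self.mode = Mode.CAN_I_PLAY_WITH
        elif kind == "resume":
            self.mode = Mode.AUTO


car = CruiseAgent(target_speed=100)
car.on_driver_input("adjust_speed", 10)
print(car.status())  # can-I-play-with: holding 110 km/h
car.on_driver_input("brake")
print(car.status())  # manual: driver in control
car.on_driver_input("resume")
print(car.status())  # auto: holding 110 km/h
```

Note that braking never asks for confirmation: taking control back should always be the cheapest action available.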

Tony Stark flexing agentive UX (image: Quora)

If an agentive solution is right for our users, and they aren’t asking for it, it’s up to us to introduce the concept and sell them the idea!

- Christopher Noessel

All of this is based on the pre-ChatGPT era, before AI could (1) take natural language input, (2) create a plan, and (3) execute and iterate.

The post-ChatGPT era is wilder, and agentive UX even more powerful (as WWDC’24 also showed)!